Background introduction

This talk presents the intelligent monitoring solution behind Goldeneye, Alimama's business monitoring platform.

The talk covers two topics: the technical implementation of intelligent monitoring, and the automated onboarding of large-scale log monitoring data. I will introduce the intelligent monitoring part; in the next session, two of my colleagues will focus on the computation behind log analysis and processing. Other companies are working on intelligent monitoring as well, so I hope this talk gives you some new ideas. Questions, suggestions, and exchanges of experience are all welcome. - Ma Xiaopeng

The talk is organized as follows: Goldeneye's business background, technical thinking, technical implementation details, difficulties, and future optimization directions.

Guest introduction

Ma Xiaopeng is the technical lead of Alimama's panoramic business monitoring platform. Since 2013 he has worked at Alibaba on large-scale system log analysis and applied research and development, and he led the main report platform for through-pass advertising and the storage selection for real-time reports. Before joining Alibaba, he was responsible for developing the data statistics platform for NetEase's e-commerce apps.

First, the background of Goldeneye intelligent monitoring

As Alimama's monitoring platform, Goldeneye performs monitoring, alarming, and assisted fault localization based on real-time statistical analysis of business logs and data. Alibaba Group has many other excellent monitoring platforms. They are open and cheap to onboard, but they also leave the monitoring thresholds to the user. Maintaining thresholds by hand for business monitoring is complicated: choosing a good threshold takes a lot of experience, and the thresholds of different monitoring items need continuous manual upkeep. With the business growing rapidly, traditional static-threshold monitoring is prone to false positives and false negatives, manual maintenance is expensive, and the monitoring horizon is limited. Goldeneye was created against this background to solve these business monitoring problems from a big-data perspective.

1. Business background:

(1) Large volume: Goldeneye now covers 90% of Alimama's main business and processes more than 100 TB of logs per day. Business monitoring has to track the traffic of every business line in real time: core data is monitored on a 1-minute cycle, and general monitoring data on a 5-minute or 1-hour cycle. With so many monitoring targets, it is almost impossible to manually maintain their thresholds, start/stop state, effective periods, and so on.

(2) Many changes: Most of the data in business monitoring are business indicators. Unlike system operation and maintenance indicators such as RT, QPS, and TPS, which are generally stable, business indicators change periodically (for example, working days differ from holidays) and shift when business marketing strategies are adjusted. Under these conditions the accuracy of a manually set static alarm threshold is hard to guarantee.

(3) Fast iteration: As Alimama's resources are consolidated and the business develops rapidly, monitoring targets change often, for example when the resource levels used for traffic monitoring are adjusted or the product types used for effect monitoring are re-divided. Monitoring blind spots have also appeared after new traffic went online.

2. Technical background:

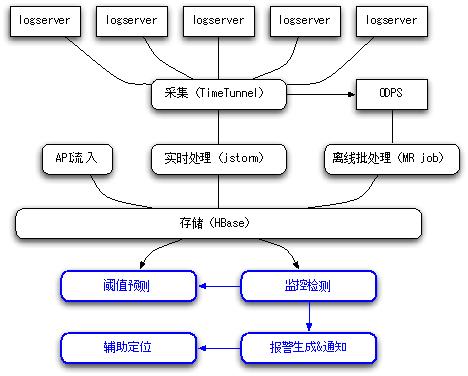

Figure 1 Goldeneye technology background

A typical business monitoring system or platform consists of modules for collection, data processing, detection, and alarming. Goldeneye is no different, but its architecture uses some of Alibaba's internal middleware. For collection we use TimeTunnel, which runs agents on each log server to pull logs into topics and is also responsible for landing offline logs on ODPS. I will not go into that part here.

For data processing we use JStorm and ODPS MR jobs for real-time and offline batch processing of logs respectively, including log parsing, validation, time-period normalization, aggregation, and writing to storage (HBase). My colleagues will cover this in detail. Today's talk focuses on four parts: threshold prediction, monitoring and detection, alarm generation and notification, and assisted localization.

Second, technical thinking

Intelligent monitoring means letting the system take over some of the manual execution and judgment in business monitoring. People maintain monitoring targets and thresholds based on experience. For the system to decide automatically which targets need monitoring and to set their threshold water levels without manual maintenance, it has to rely on statistical analysis of historical sample data.

By collecting samples of monitoring data and applying an intelligent detection model, the program automatically predicts the baseline value and thresholds of each monitored indicator. It then uses rule combinations and a mean-shift algorithm to detect anomalies, so that it can accurately identify the abnormal points and change points that warrant an alarm.

1. Adaptive threshold water levels

In the past, we added monitoring in two ways:

Set a water level for indicator M1; a value below (or above) the water level triggers an alarm;

Set limits on the same-period and period-over-period fluctuation of indicator M1, for example triggering an alarm on a 20% same-period fluctuation or a 10% period-over-period fluctuation.

Both are common monitoring methods, but the results are not ideal. A static threshold cannot adapt to change over the long run, it needs manual maintenance, and its alarm accuracy depends on how stable the same-period and period-over-period data are.
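To make the two static approaches concrete, here is a minimal Python sketch. The function names and parameters are hypothetical and are not taken from Goldeneye; the 20% and 10% limits are the example values from the text.

```python
def water_level_alarm(value, low=None, high=None):
    """Static water level: alarm when value is below `low` or above `high`."""
    if low is not None and value < low:
        return True
    if high is not None and value > high:
        return True
    return False


def fluctuation_alarm(current, same_period, previous,
                      same_period_limit=0.20, previous_limit=0.10):
    """Alarm when the relative change against either reference exceeds its limit.

    `same_period` is the value at the same time in an earlier cycle and
    `previous` is the value of the immediately preceding period.
    """
    def rel_change(now, ref):
        # A zero reference cannot be compared; treat it as an infinite change.
        return abs(now - ref) / abs(ref) if ref else float("inf")

    return (rel_change(current, same_period) > same_period_limit
            or rel_change(current, previous) > previous_limit)
```

Both checks depend entirely on hand-picked constants, which is why they stop fitting once the indicator's normal level drifts.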

Can the system adapt to changes and adjust the threshold water level automatically, the way an automatic transmission shifts gears according to speed?

2. Automatic discovery of monitoring items

When our monitoring system has the ability to predict dynamic thresholds, can maintenance of monitoring items be handed over to the system?

You may have run into similar situations: old monitoring items no longer receive data, and new monitoring targets are missed for various reasons. Manually maintained monitoring items have to be kept in sync with things going online and offline, but when there are thousands or tens of thousands of targets to monitor, people cannot keep up with the maintenance, or they simply overlook it.

Can we hand the rules for selecting monitoring items over to the system, and let it check periodically which monitoring items are no longer valid, which need to be added, and which need to be adjusted? The discovery rules themselves are stable; only the set of monitoring items derived from them keeps changing.

3. Filtering false positives

Once the monitoring system can predict dynamic thresholds and automatically discover and maintain monitoring items, how do we strike a balance between no false negatives and no false positives?

In monitoring, false negatives cannot be tolerated, but too many false positives easily lead to alarm fatigue.

The usual practice is to loosen thresholds so that false positives do not numb people, but this tends to cause false negatives, especially when a decline is not obvious.

Goldeneye's approach is to reduce the false positive rate on the premise of having no false negatives. First we capture every suspected abnormal point; at this stage detection against the dynamic threshold is relatively strict, and alarm convergence is not considered. Then we verify and filter the suspected abnormal points, and only then generate alarm notifications. Verification and filtering follow predefined rules such as combined-indicator judgments and alarm convergence expressions.

Third, the technical implementation details

The following covers some implementation details in five parts: the architecture of the monitoring system, dynamic thresholds, change point detection, the intelligent panorama, and assisted localization.

1. Overall introduction

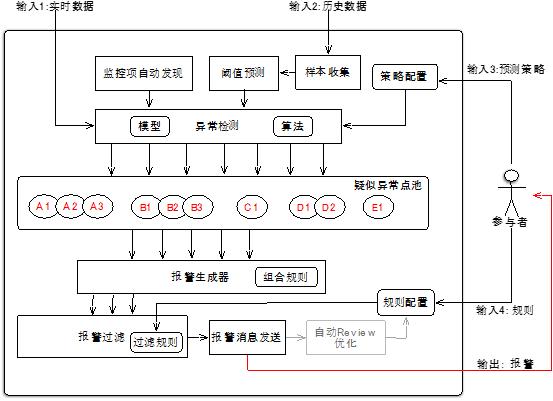

The Goldeneye monitoring system has four inputs: real-time monitoring data, historical data, forecasting strategies, and alarm filtering rules.

Among them, historical data is the accumulation of real-time monitoring data.

The forecasting strategy mainly includes:

(1) Threshold parameters: a coefficient applied to the predicted baseline value to determine the upper and lower threshold limits, threshold prediction coefficients per time period, and threshold prediction coefficients per alarm sensitivity level;

(2) Prediction parameters: the number of samples, the Gaussian function level or filtering ratio used to filter abnormal samples, and the confidence level used when segmenting and selecting samples with the mean-shift model.

The alarm filtering rules filter out unnecessary alarms while still capturing every suspected abnormal point. For example, if indicator M1 is abnormal but the combination rule requires M1 and M2 to be abnormal at the same time, the point is filtered out. As another example, under an alarm convergence rule you may decide that only the 1st, 2nd, 10th, and 50th consecutive alarms of a monitoring item deserve attention. Setting the convergence expression to 1, 2, 10, 50 means that the 3rd, 4th, ..., 9th, 11th, 12th, ..., 49th alarms are not sent as notifications, because repeated notifications add little; this rule gives automatic convergence as needed. It is also possible to merge alarms into one by rule when multiple instances of the same monitoring item fire at the same time. These rules can be implemented case by case. The ultimate goal is to surface the alarms that matter most in the most concise way.

(In addition, we have recently been considering a new convergence method: alarm on the first and the last occurrence and automatically calculate the cumulative gap, so that the start and end of the anomaly are more obvious.)
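The combination rule and the convergence expression described above can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the way Goldeneye actually stores and evaluates its rules is not described in the talk.

```python
def combined_abnormal(abnormal_flags, required=("M1", "M2")):
    """Combination rule: alarm only if every required indicator is abnormal."""
    return all(abnormal_flags.get(name, False) for name in required)


def should_notify(consecutive_count, convergence=(1, 2, 10, 50)):
    """Convergence rule: notify only at the listed positions of an alarm run."""
    return consecutive_count in convergence


def notified_positions(run_length, convergence=(1, 2, 10, 50)):
    """Which alarms in a run of `run_length` consecutive alarms are sent."""
    return [n for n in range(1, run_length + 1)
            if should_notify(n, convergence)]
```

For a run of 12 consecutive alarms, `notified_positions(12)` keeps only the 1st, 2nd, and 10th, so the other nine are suppressed.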

Figure 2 Goldeneye alarm system architecture

2. Dynamic threshold

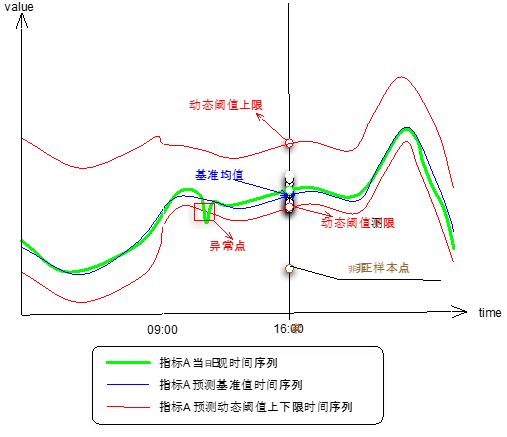

Monitoring uses control charts to visualize the time series of the monitored indicators so that people can clearly see how they fluctuate. Control-chart monitoring has mostly relied on static thresholds in the past, such as the static water level and the same-period and period-over-period comparisons mentioned above. With dynamic thresholds, for each point of the time series on the control chart we estimate the baseline value, the upper threshold, and the lower threshold of the indicator at that moment, so that the program can decide automatically whether the point is abnormal. Because the estimate is based on historical samples from the past several months or more, it is more accurate than using only the two same-period and period-over-period data points as a reference. The theoretical basis for dynamic threshold prediction is the Gaussian distribution and the mean-shift model.

Figure 3 Dynamic Threshold Principle

The main steps of dynamic threshold prediction are as follows:

(1) Sample selection: Choose according to your own needs; we generally recommend samples from roughly the past 50 days.

(2) Abnormal sample filtering: This step uses a Gaussian distribution function to filter out samples whose function value is less than 0.01, or whose absolute deviation from the mean is greater than 1 standard deviation.

(3) Sample truncation: In our optimized version, on top of (2) we run a mean-shift model over the time series of historical samples as a segmentation test. If there is periodic variation or a continuous monotonic change, the mean-shift model is iterated repeatedly to find the mean-shift points, and we keep the segment closest to the current date (in other words, the most stable sample sequence from the most recent period). One more point to note in sample selection: holiday and working-day samples should be kept separate. The threshold for a working day should be predicted from working-day samples, and likewise for holidays. That is, prediction samples are split and selected along the dimensions of date, weekend/holiday, and stationarity.

(4) Baseline prediction: After the filtering in (2) and the truncation in (3), the remaining samples are close to ideal. Keeping the samples in date order, we use exponential smoothing to predict the baseline value for the target date, and then calculate the upper and lower thresholds from the baseline using the sensitivity or threshold coefficient.

(Additional note on step 4: some of you may have used exponential smoothing before. Our weighting of the samples is similar, but predictions from exponential smoothing alone are often unsatisfactory because the samples have not been fully filtered down to the most stable sample set.)
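A simplified Python sketch of steps (2) and (4) follows. It assumes the samples are the values of one indicator at the same time of day on past comparable days, ordered oldest to newest. The mean-shift truncation of step (3) and the working-day/holiday split are left out, and the smoothing factor `alpha` and threshold `coefficient` are illustrative values, not Goldeneye's.

```python
from statistics import mean, pstdev


def filter_outliers(samples, max_sigma=1.0):
    """Step (2): drop samples more than `max_sigma` std devs from the mean."""
    mu, sigma = mean(samples), pstdev(samples)
    if sigma == 0:
        return list(samples)
    return [x for x in samples if abs(x - mu) / sigma <= max_sigma]


def exponential_smoothing(samples, alpha=0.3):
    """Step (4): simple exponential smoothing over date-ordered samples."""
    level = samples[0]
    for x in samples[1:]:
        level = alpha * x + (1 - alpha) * level
    return level


def predict_threshold(samples, coefficient=0.2, alpha=0.3):
    """Return (lower, baseline, upper) for the target date."""
    kept = filter_outliers(samples)
    baseline = exponential_smoothing(kept, alpha)
    return baseline * (1 - coefficient), baseline, baseline * (1 + coefficient)
```

With nine samples of 100 and one spike of 500, the spike is filtered out and the predicted baseline stays at about 100, with thresholds near 80 and 120.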

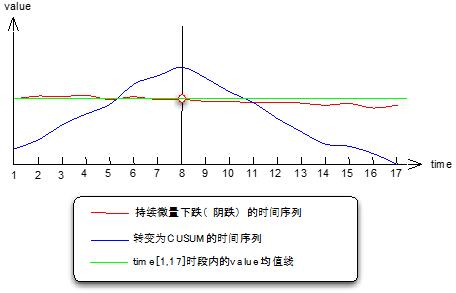

3. Change point detection

Dynamic thresholds use statistical analysis to solve the false positives and false negatives of static thresholds, which saves manual maintenance cost and reduces monitoring risk to some extent. However, dynamic thresholds are also limited when faced with small fluctuations and slow, persistent declines: if the threshold interval is too tight it produces false positives, and if it is loosened it misses less significant failures. When reviewing missed cases, we found that the trend of these small fluctuations is visible to the human eye on the control chart, but hard for a program to catch by checking whether a threshold interval is breached. We therefore introduced the mean-shift model to find change points. A change point is the moment at which a sustained slight decline has accumulated enough change that the mean of the monitored indicator over a period of time before and after that point has shifted.

Figure 4 Principle of mean shift

As the figure above shows, the mean-shift model works by converting a slow downward trend that is hard for a program to recognize into a CUSUM time series whose trend is obvious: monotonically increasing to the left of the change point and monotonically decreasing to the right. The CUSUM time series describes the cumulative deviation of each point of the monitored time series from the mean. It starts at S0 = 0, ends at Sn = 0, and changes monotonically on either side of the change point.

CUSUM stands for cumulative sum. It is used to detect the point at which anomalies begin in a relatively stable data sequence. Its most typical application is change detection for a shift in a parameter: the program computes the first derivative at each point of the CUSUM sequence, and a point where the sequence goes from continuously increasing to continuously decreasing is judged to be a change point. How many consecutive increasing and decreasing points are required is up to you.

For the mean-shift model used in change point detection, you can find papers online; I cannot find one on this computer right now. The above mainly explains the principle of finding change points. Put simply, it is a problem transformation: a slow decline is hard to quantify and judge directly, so we look for the "Mt. Fuji" shape on the CUSUM control chart instead. This is my own informal explanation.

As mentioned above, we use the first derivative at each point of the CUSUM sequence to decide whether an inflection point (change point) has arrived. That example is an idealized one. When I applied the mean-shift model in practice I ran into more complicated situations: the picture above has a single "peak", but there can be more than one. In that case the problem has to be transformed again. For example, the CUSUM can be differenced further, or, as we do in practice, the sign of the first derivative can be recorded and a change point declared when it goes from N consecutive positive values to N consecutive negative values.

The change point detection algorithm is hard to explain in full here. During repeated iterations, the number of iterations and the confidence level can be set according to the actual situation, which helps improve the accuracy of change point discovery.

4. Intelligent panorama
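The CUSUM idea and the "N consecutive positive values followed by N consecutive negative values" rule can be sketched as follows. This is a minimal single-pass illustration for a downward shift only; it does not include the iterations or confidence levels mentioned above, and the function names are hypothetical.

```python
def cusum(series):
    """Cumulative sum of deviations from the series mean, starting at S0 = 0."""
    mu = sum(series) / len(series)
    sums = [0.0]
    for x in series:
        sums.append(sums[-1] + (x - mu))
    return sums


def find_change_point(series, n=3):
    """Index of the first point after the shift, or None if no shift is found.

    The slope of the CUSUM curve must be positive for n consecutive steps
    and then negative for n consecutive steps (the "Mt. Fuji" peak).
    """
    s = cusum(series)
    # signs[k] is the sign of the CUSUM step contributed by series[k].
    signs = [1 if b > a else -1 if b < a else 0 for a, b in zip(s, s[1:])]
    for i in range(n, len(signs) - n + 1):
        rising = all(v > 0 for v in signs[i - n:i])
        falling = all(v < 0 for v in signs[i:i + n])
        if rising and falling:
            return i
    return None
```

For a series of five values of 100 followed by five values of 90, the CUSUM rises to a peak of 25 and returns to 0, and `find_change_point` reports index 5, the first point at the lower level.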

Change point detection makes up for the weakness of dynamic thresholds in detecting small fluctuations. Combining the two methods basically achieves the balance between no false negatives and no false positives without long-term manual maintenance, and this is the basis of intelligent panoramic monitoring. Once the human cost of monitoring is saved, in theory we can rely on intelligent monitoring to expand the monitoring horizon without limit and link the resulting alarms together.

Consider the automatic discovery rules for monitoring items, for example real-time monitoring of indicator M under dimension D. Dimension D may have 1,000 dimension values, and those 1,000 values keep changing. How can the program maintain the monitoring items automatically? You can define a rule, for example that a dimension value needs monitoring when indicator M > X. (After all, not everything needs alarm monitoring; as long as fault localization and handling are not fully automated, handling alarms also takes manpower.) Among the items that satisfy M > X, to improve alarm accuracy we also differentiate alarm sensitivity by importance: for macro-level and core dimension values we want to detect fluctuations very sensitively, while for less important dimension values the predicted threshold can be looser. Both can be set through the threshold parameters mentioned above.

(Note: this rule is only an example; implement rules that fit your own situation. For system operation and maintenance monitoring, for instance, some rules judge by how fast a fault is approaching or by a risk coefficient, and you can build around that indicator. Disk utilization monitoring, for example, could use the ratio of capacity growth rate to remaining resources as a reference.)
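As an illustration of the M > X rule with importance-based sensitivity, here is a small Python sketch. The function name, the `tight` and `loose` coefficients, and the idea of returning a threshold coefficient per item are assumptions made for the example.

```python
def discover_monitoring_items(latest_values, min_value, core_dims=(),
                              tight=0.1, loose=0.3):
    """Select dimension values to monitor and assign a threshold coefficient.

    latest_values: dimension value -> recent value of indicator M.
    min_value: the X in the rule "monitor when M > X".
    core_dims: dimension values considered core; they get the tighter
               (more sensitive) coefficient.
    """
    items = {}
    for dim_value, m in latest_values.items():
        if m > min_value:
            items[dim_value] = tight if dim_value in core_dims else loose
    return items
```

Running this periodically against fresh data adds new dimension values that cross X and drops those that fall below it, while the rule itself stays unchanged.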

Once the above conditions are met, intelligent panoramic monitoring can basically run, but we ran into other problems. For example, a business team needs to be onboarded to monitoring but does not necessarily need log parsing, because it has its own data: a database, interface responses, messages in message middleware, and so on. We therefore use tiered data access: data can come in through the standard log output format, through the agreed time-series storage schema, or through the threshold prediction interface. My colleagues will cover this separately in the next session. I mention it here because panoramic monitoring takes in a lot of data, so the access path has to be tiered and flexible.

5. Assisted localization

The ultimate goal of alarming is to reduce losses, so locating the cause of a problem is especially important. Goldeneye tries to have the program carry out the routines people follow when locating causes manually. These routines are currently produced through configuration and have not reached the level of machine learning. As more business monitoring indicators are onboarded and the indicator system matures, however, statistical correlation analysis may allow the program to generate these routines as well. Here are a few routines, summarized from manual practice, that the program can execute.

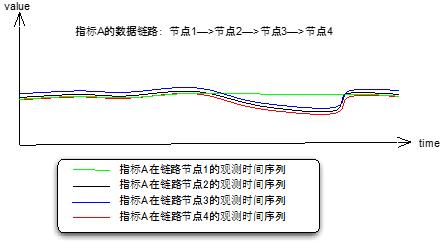

(1) Full link analysis

From the perspective of technical architecture and business processes, and looking at external factors, whether a monitored indicator is normal is generally affected by its upstream. Following this idea, we check one by one whether each upstream is normal, which forms a link. There are many examples, such as the QPS of modules A, B, C, D, and E in a system architecture.

Figure 5 full link tracing

(Incidentally, there are two ways to record data for full-link analysis: either each node passes the information through internally so that it is spliced into a complete link-processing record in the final node's log, or each node asynchronously pushes its information to middleware.)

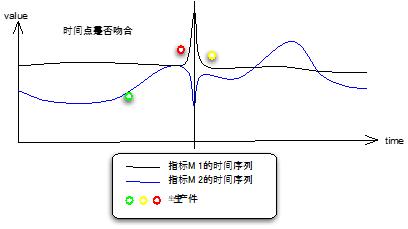

(2) What happened at the alarm time?

This is most people's first reaction after receiving a monitoring alarm. We collect as many operation and maintenance events and business adjustment events as possible, and overlay a scatter plot of these events on the control chart of the alarming indicator so that the problem can be seen at a glance. For the program to do this automatically, the time at which each event occurred is normalized to fixed time points in the same way as the monitoring data, and then checked against the alarm time point.

Figure 6 Production events and time series
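The time normalization and matching step might look like the sketch below. It assumes a monitoring period in minutes that divides an hour evenly; the optional `lookback_periods` parameter is an addition for illustration and is not described in the talk.

```python
from datetime import datetime, timedelta


def normalize(ts, period_minutes=5):
    """Floor a timestamp to the start of its monitoring period."""
    minute = ts.minute - ts.minute % period_minutes
    return ts.replace(minute=minute, second=0, microsecond=0)


def events_near_alarm(alarm_time, events, period_minutes=5,
                      lookback_periods=0):
    """Names of events that fall in the alarm's period.

    events: iterable of (name, timestamp) pairs.
    lookback_periods: how many earlier periods to include as well
                      (0 means an exact period match).
    """
    alarm_slot = normalize(alarm_time, period_minutes)
    earliest = alarm_slot - timedelta(minutes=period_minutes * lookback_periods)
    return [name for name, ts in events
            if earliest <= normalize(ts, period_minutes) <= alarm_slot]
```

An alarm at 10:07:30 and a deployment at 10:06:10 both normalize to 10:05 with a 5-minute period, so the deployment is reported as a candidate cause.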

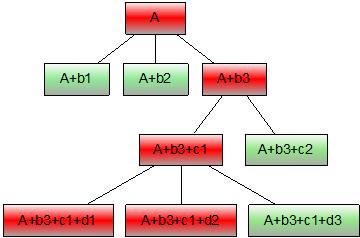

(3) A/B test or TopN

Some people locate problems by elimination to narrow the scope. For example, if indicator M fluctuates abnormally under dimension D, D can be split into D1, D2, and D3 for comparison. Common cases include comparisons across data centers, groups, versions, and terminal types. If the monitoring data has a clear hierarchy, we can drill down layer by layer with A/B tests until the specific cause is located. Another way, instead of enumerating A/B tests, is to go straight to indicator M, list the sub-dimensions D1, D2, D3, ... of dimension D, take the TopN by indicator M, and pick out the most prominent few.

Figure 7 A/B test or TopN

TopN works similarly. As you can see, intelligent monitoring and assisted localization both rest on a clear data hierarchy and a metadata management system. This foundation is essential.
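One way to read the TopN approach is to rank the sub-dimensions by how far indicator M fell against its baseline. The sketch below makes that assumption; the talk does not say which ranking key Goldeneye uses, and the function name is hypothetical.

```python
def top_n_contributors(baseline, actual, n=3):
    """Rank sub-dimensions by the drop of indicator M against its baseline.

    baseline, actual: sub-dimension name -> value of indicator M.
    Returns the n largest drops as (name, drop) pairs.
    """
    drops = {d: baseline[d] - actual.get(d, 0) for d in baseline}
    return sorted(drops.items(), key=lambda kv: kv[1], reverse=True)[:n]
```

If D2 drops from 200 to 120 while D1 and D3 barely move, D2 comes out first and is the place to drill down next.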

(4) Related indicators

Different indicators are continuous time series in monitoring. Some indicators are related by a function. For example, ctr = click / pv, so changes in click and pv inevitably change ctr; this relationship is described directly by the function. Other relationships between indicators cannot be described by a formula, and their correlation is measured with statistical measures such as the Pearson coefficient. Goldeneye does not yet analyze indicator correlation automatically; the relationships are set manually based on experience, and the program only carries out the tracing and localization process. For example, if indicator M1 raises an abnormal alarm, it can trigger detection of the related indicators RMG1/RMG2/RMG3 (these indicators may not need 24/7 alarm monitoring and are checked only when needed), and so on.

Figure 8 Relevant indicators

These methods can also be attempted programmatically. I personally think that indicator correlation depends on building an indicator system, and that this construction can combine manual experience with statistical analysis by the program. The indicator system should at least describe the classification of indicators, the source of the data, their specific meaning, and the weights of related indicators, which can be derived by applying statistical analysis methods.

Fourth, difficulties
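For reference, the Pearson coefficient between two indicator time series of equal length can be computed as in this sketch. Goldeneye sets its correlations by hand at present, so this only shows what an automated measurement could start from.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        # A constant series has no defined correlation; report 0.0 here.
        return 0.0
    return cov / sqrt(var_x * var_y)
```

Values near 1 or -1 suggest two indicators move together or in opposition and are candidates for a related-indicator rule; values near 0 suggest no linear relationship.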

1. Time series smoothing

How smooth a time series is matters greatly for prediction accuracy. However, most of our business monitoring time series are not smooth. Taking the 5-minute monitoring period as an example, apart from the overall and core monitoring series, most time series normally fluctuate within a certain range while the overall trend is stable. The methods we currently use are:

(1) Moving average: when the sawtooth fluctuation is pronounced and easily causes false alarms, lengthen the monitoring and detection period from 5 minutes to 30 minutes, which is equivalent to fitting the data of 6 time windows together to smooth the time series.

(2) Consecutive alarm judgment: if you feel that discovering a problem after 30 minutes is too late, you can still detect every 5 minutes. Sawtooth fluctuation triggers alarms easily, so send the alarm only after three consecutive abnormal detections, which avoids false alarms from sawtooth fluctuation.

(3) When the mean-shift model is needed to detect small fluctuations, the 24-hour time series itself has traffic peaks and troughs. Such series are generally smoothed by differencing, and the order of differencing has to be chosen case by case. Goldeneye does not use differencing directly: since we have already predicted the baseline value, we use the sequence of gaps between the actual monitored value and the baseline value as the input sample for change point detection.
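The three techniques above are simple enough to sketch directly. The function names are hypothetical; the window of 6 and the requirement of 3 consecutive abnormal points are the example values from the text.

```python
def aggregate_windows(values, window=6):
    """(1) Average each run of `window` points, e.g. 6 x 5 min = 30 min."""
    return [sum(values[i:i + window]) / window
            for i in range(0, len(values) - window + 1, window)]


def consecutive_alarm(abnormal_flags, required=3):
    """(2) True once `required` abnormal detections occur in a row."""
    run = 0
    for flag in abnormal_flags:
        run = run + 1 if flag else 0
        if run >= required:
            return True
    return False


def gap_sequence(actual, baseline):
    """(3) Actual minus predicted baseline, used as change point input."""
    return [a - b for a, b in zip(actual, baseline)]
```

A sawtooth such as 10, 20, 10, 20, ... becomes a flat 15 after aggregation, and an isolated abnormal point never reaches three in a row, so neither produces an alarm.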

2. Instrumentation cost

Business monitoring data mainly comes from logs, which business system modules emit to middleware or push to a collection interface. Emitting data from a system module appears to cost much less than writing directly to disk, but for a system under heavy request pressure, opening a bypass to write data has some overhead even when it happens at the memory level.

The solution is data sampling: monitoring data that is evenly distributed over time is sampled directly by percentage.
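Percentage sampling can be sketched as below. The scale-up step is an assumption about how sampled counts would be turned back into estimates of the full volume; the talk does not describe it.

```python
import random


def should_sample(rate, rng=random):
    """Keep a record with probability `rate` (for example 0.1 for 10%)."""
    return rng.random() < rate


def scale_up(sampled_count, rate):
    """Estimate the full count from a count observed at sampling rate `rate`."""
    return sampled_count / rate
```

This only gives a reliable estimate when the data is evenly distributed over time, as the text notes; sparse or bursty indicators would lose too much under the same rate.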

3. Data standardization

Although data access is tiered and open, we have defined standard data formats, such as the time-series storage schema and an extensible log message proto format. These formats carry standard monitoring dimensions such as business line, product, traffic type, data center, and version. The purpose is to be able to link the monitoring data together later for correlation analysis of indicators during fault localization.

However, promoting these standards requires the recognition and support of many participants, and sometimes even the refactoring of their system architectures, which makes it quite difficult.

The current solution is to encapsulate monitoring data in the standardized message format and to ensure that the Goldeneye storage layer provides a standard schema and access interface.

Fifth, future optimization directions

Time series prediction model: the current model only considers date, holiday/weekend, and time period. It does not yet account for year-on-year trends, the impact of large-scale campaigns, or operational adjustments, which still need to be abstracted into the model.

Indicator correlation: have a statistical analysis program determine it automatically.
